Algorithms will only get better and better at predicting people’s preferences. But is that a benefit, or something that gradually kills humanity?

Netflix’s documentary “The Social Dilemma” describes how the tech industry is stealing our information and using AI algorithms to take complete control of the content we view, all in order to make a profit.

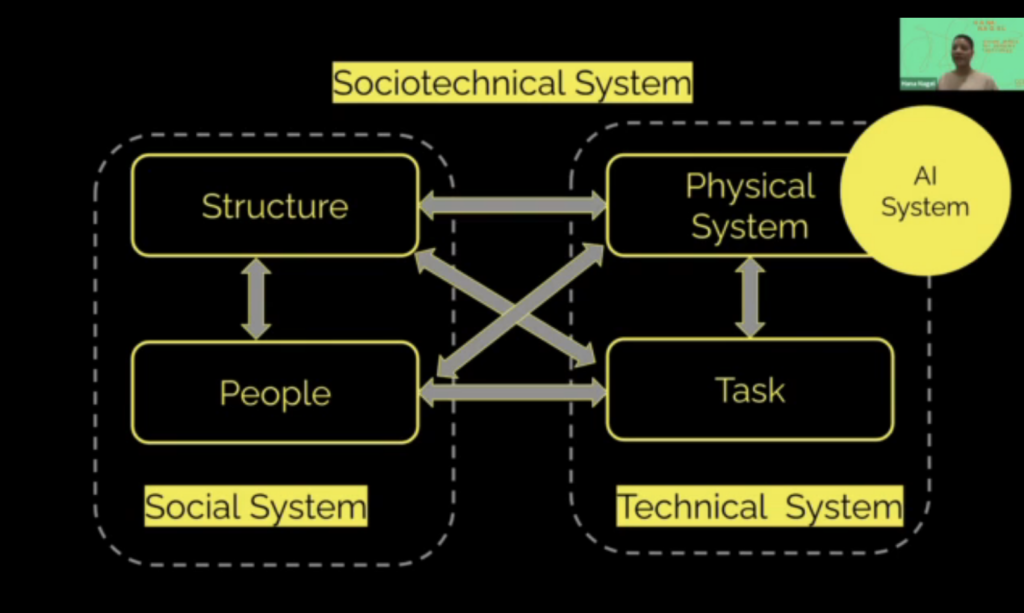

Hana Nagel, who currently works as a service designer at Element AI, came to CCA to give a lecture on ethical AI. Her talk inspired me to predict what an ideal future IoT might look like.

She referred to the guidelines published by the Organisation for Economic Co-operation and Development (OECD), which state that an ethical AI system should meet five basic principles:

- AI systems should drive inclusive growth, sustainable development and well-being.

- AI systems should respect human-centered values and fairness.

- AI systems should commit to transparency and explainability to stakeholders, providing plain and easy-to-understand information.

- AI systems should be robust, secure and safe.

- AI actors should be accountable.

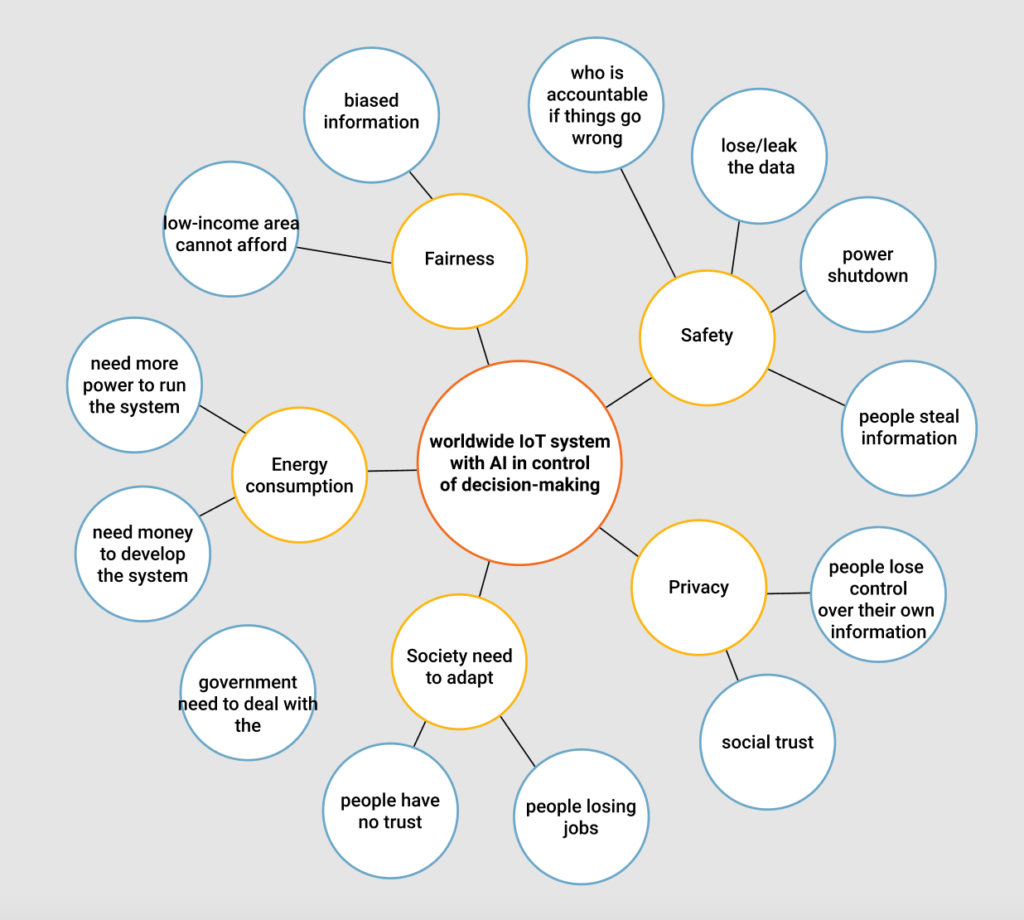

In the lecture, she argued that the “How might we” statement used in traditional interaction design will not be enough to keep up with the arrival of future technologies. Instead, we should use “What happens if we” statements to map out unknown consequences. I found that this overlaps with the “futures wheel” diagram.

From the diagram, we can see that most issues concern safety and privacy. Since uncertainty about safety and privacy can keep many users from adopting new technology, the primary job of future interaction designers will be to make complicated algorithms transparent and comprehensible to ordinary users, so that they feel more secure.

1. Designers should let users see how their information is processed.

Some restaurants choose to build an open kitchen right next to the dining area. Seeing the cooking process builds trust between the host and the guests, so the guests tend to feel more satisfied and secure about what they eat and have a better experience. The same applies to interface design: designers should do their best to make clear how the system works.

For example, when AI makes a prediction or recommendation based on the user’s data cloud, there needs to be a clear indicator of what information was used to generate the result. Instead of seeing the code that controls the algorithm, users should see simple, clear illustrations that people of different ages, regions and cultures can understand.

2. Designers need to give users easy control.

Although users may not have full control over how their information is processed, basic controls should be preserved and highlighted in the interface.

In a future where more and more personal information is shared from users to public networks, it becomes even more crucial that users control which information can be used in which scenario.

Here is a sketch illustrating those three levels. Designers should do their best to bring users to level 3, regardless of the technology employed.
